CitySEIRCast: an agent-based city digital twin for pandemic analysis and simulation

Shakir Bilal, Wajdi Zaatour, Yilian Alonso Otano, Arindam Saha, Ken Newcomb, Soo Kim, Dongjun Kim, Raveena Ginjala, Derek Groen, Edwin Michael

- Background

- High‑resolution city‑level epidemic forecasting is needed.

- Problem Definition

- Agent‑based modeling + real‑world city data → timely forecasts.

- Method

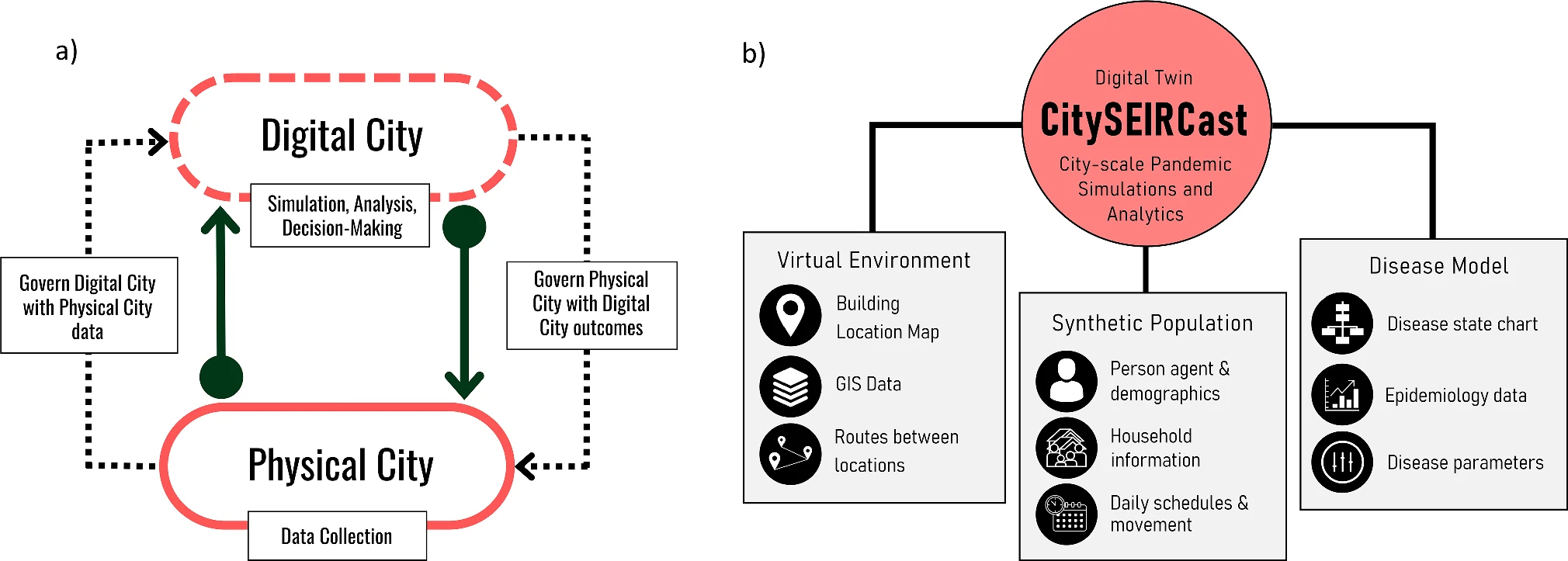

- City digital twin + agent‑based SEIR

- Automated, scalable pipelines on hybrid cloud/HPC

- Results

- Realistic city‑level forecasts

- Actionable for policy planning

- Role

- Data analysis

- HPC setup

- Python and C++

- App build

- Azure hosting
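The agent-based SEIR approach listed under Method can be illustrated with a toy simulation. The sketch below is not the CitySEIRCast implementation. It assumes random (homogeneous) mixing in place of the city digital twin's real-world data, and every function name and parameter (`simulate_seir`, `count_states`, `contacts_per_day`, `p_transmit`, `p_e_to_i`, `p_i_to_r`) is hypothetical. On each simulated day, infectious agents contact randomly chosen agents and may expose susceptible ones. Exposed agents then become infectious, and infectious agents recover, each with a fixed daily probability.

```python
import random
from dataclasses import dataclass

# Disease states of the SEIR model
S, E, I, R = "S", "E", "I", "R"


@dataclass
class Agent:
    """One simulated resident (hypothetical; a city twin would likely add home, work, age, ...)."""
    state: str = S


def count_states(agents):
    """Return the current (S, E, I, R) counts."""
    counts = {S: 0, E: 0, I: 0, R: 0}
    for agent in agents:
        counts[agent.state] += 1
    return (counts[S], counts[E], counts[I], counts[R])


def simulate_seir(n_agents=1000, n_initial_infected=5, days=100,
                  contacts_per_day=8, p_transmit=0.05,
                  p_e_to_i=1 / 5, p_i_to_r=1 / 7, seed=42):
    """Stochastic agent-based SEIR with random mixing (assumed, illustrative parameters).

    Returns a list of daily (S, E, I, R) counts, starting with day 0.
    """
    rng = random.Random(seed)  # local seeded RNG so runs are reproducible
    agents = [Agent() for _ in range(n_agents)]
    for agent in rng.sample(agents, n_initial_infected):
        agent.state = I

    history = [count_states(agents)]
    for _ in range(days):
        # 1. Transmission: each infectious agent meets random others today.
        #    Exposures are collected first so all checks use start-of-day states.
        newly_exposed = set()
        for agent in agents:
            if agent.state != I:
                continue
            for _ in range(contacts_per_day):
                j = rng.randrange(n_agents)
                if agents[j].state == S and rng.random() < p_transmit:
                    newly_exposed.add(j)
        # 2. Progression: E -> I and I -> R, at most one transition per agent per day.
        for agent in agents:
            if agent.state == E and rng.random() < p_e_to_i:
                agent.state = I
            elif agent.state == I and rng.random() < p_i_to_r:
                agent.state = R
        # 3. Apply today's exposures after progression, so they incubate at least one day.
        for j in newly_exposed:
            agents[j].state = E
        history.append(count_states(agents))
    return history


if __name__ == "__main__":
    history = simulate_seir()
    peak_day = max(range(len(history)), key=lambda d: history[d][2])
    print(f"peak infectious: {history[peak_day][2]} agents on day {peak_day}")
    print("final (S, E, I, R):", history[-1])
```

In a city digital twin, the random `rng.randrange(n_agents)` contact draw would presumably be replaced by contacts derived from each agent's household, workplace, and school locations, which is what makes location-specific, city-level forecasts possible.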